Emotion algorithms

To answer the question of whether robots have feelings, we must have some notion of the nature of feelings. What are feelings? Or emotions? Are they to be accounted for purely physically, as the expression of electrochemical processes that take place within one’s body? Are they our psyche’s perception of the physiological activity taking place in our bodies when we are feeling that emotion?

In this view emotion is what we experience when we are carrying out the imperatives of nature. Emotion IS physiology. That was the view of William James. His idea is explored in the posting, Sense of Volition. Suppose we accept this mechanistic view. Then robots might have feelings!

Consider a game playing robot. It ‘decides’ which move to make in order to win. “Because I like to win, I play thusly,” the robot appears to declare as it makes its move. It seems ‘to know success.’ It appreciates winning. Within the computer program is embedded a ‘want-to-win.’ A desire.

Is the robot fooling us? You say, “The robot has no ‘desires’; that’s just the way he’s programmed.” In a computer we ascribe its apparently emotional behavior to the program; it’s how the program functions.

Is my ‘desire’ not how my ‘program’ functions? Perhaps we should accept the same architecture for a human: what we call emotion is behavior executing some electrochemical program within us. Then the associated emotion is how that chemistry feels to us. That being the case, it may very well be that the computer, being programmed to exhibit some behavior, ‘feels’ the associated emotion. Its very program is the execution of emotional drives. The program itself contains the emotional content.

There is a strikingly insightful video about the nature of a computer in which Richard Feynman explains how a computer works. The essence of the matter has nothing to do with computation at all, but rather with the processing of data. The video is at: https://www.youtube.com/watch?v=EKWGGDXe5MA

Using Feynman’s paradigm we may perceive how emotion is embedded in a game-playing computer. So here is an elemental game to explore the idea. It is so simple that the entire tree of possible games is visually manageable.

I call this game, Four Quadrants. It’s a simpleton’s tic tac toe. Black or green fills some empty quadrant of the square in alternating sequence. Black starts. Black ‘wins’ if the top row is black. Otherwise it loses.

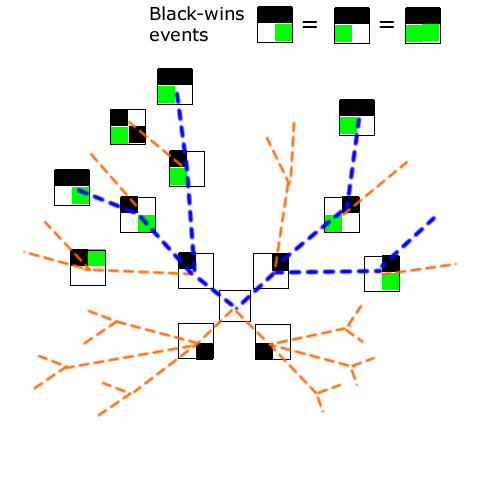

Here is the tree of all possibilities.

Each node of the tree represents the appearance of the four-quadrant board upon which a move is to be made. Examples of these board configurations are pictured at some of the nodes. The root of the tree is at the center where the game begins with no quadrants filled. The emanating branches are the moves made. They terminate in new nodes corresponding to new board appearances.

The blue lines represent the four ‘win’ paths. The twenty orange ones are all ‘lose’ paths. Black and Green play alternately, Black first, using the overriding rule: at your turn, fill an empty quadrant with your color. This ‘rule’ is not learned, but programmed.
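The path counts can be checked with a few lines of Python. This is only a sketch under my own assumptions: the quadrants are numbered 0 to 3 with 0 and 1 as the top row, and a game is a sequence of three moves (black, green, black). The names `TOP_ROW` and `black_wins` are invented for the illustration.

```python
from itertools import permutations

# Assumed numbering: 0 = top-left, 1 = top-right, 2 = bottom-left, 3 = bottom-right.
TOP_ROW = {0, 1}

def black_wins(moves):
    """moves = (black's first, green's, black's second) quadrant."""
    black_first, _green, black_second = moves
    return {black_first, black_second} == TOP_ROW

# Every path from the root of the tree: three distinct quadrants in order.
paths = list(permutations(range(4), 3))
wins = [p for p in paths if black_wins(p)]
losses = [p for p in paths if not black_wins(p)]

print(len(paths), len(wins), len(losses))  # 24 4 20
```

The four winning paths are exactly those in which Black takes both top quadrants and Green takes a bottom one.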

For Green the choice of quadrant is made randomly. For Black the rule is more complicated. Black is programmed to ‘want to win’; to learn how to win. Only at the beginning does it choose randomly.

A game is played out. After three moves – black, green, black – the game ends. If the two upper squares are black the game is a win for black. If not the game is a loss. And the command ‘stop playing’ is encountered. A new game may begin.

Now the important thing is this: that all of the games are recorded in a data base. So, at each move, Black may consult that data base. He compares the current look of the board to its appearance in all of the past games ever played. Eventually there are many of these past games available. Black can query the data base as to whether a particular move led to a win or to a loss in past games. If it led to a win Black is directed to play it. Thus is Black’s passion to win produced by a program.

With this learning technique Black improves his chances of winning against a random-play opponent from only one time in six to a win two out of three times – pretty impressive.
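Here is a minimal sketch of how those two figures can be reproduced. It is not the original program; it assumes the data base already holds every possible game, that Black picks uniformly among the moves that led to a win from the current board (or among the empty quadrants if there are none), and that Green picks uniformly at random. The quadrant numbering and the function names are my own.

```python
from fractions import Fraction
from itertools import permutations

TOP_ROW = {0, 1}  # assumed numbering: 0, 1 = top row; 2, 3 = bottom row

def black_wins(moves):
    return {moves[0], moves[2]} == TOP_ROW

# The 'data base': every game ever played, with its outcome.
DATABASE = [(g, black_wins(g)) for g in permutations(range(4), 3)]

def winning_moves(prefix, database):
    """Moves that, from this board, led to a win in some recorded game."""
    n = len(prefix)
    return sorted({g[n] for g, won in database if won and g[:n] == prefix})

def black_choices(prefix, database):
    """Black plays a remembered winning move if any, else any empty quadrant."""
    empty = [q for q in range(4) if q not in prefix]
    return winning_moves(prefix, database) or empty

def win_probability(database):
    """Exact chance that Black wins against a random-play Green."""
    total = Fraction(0)
    firsts = black_choices((), database)
    for b1 in firsts:
        greens = [q for q in range(4) if q != b1]
        for g in greens:
            seconds = black_choices((b1, g), database)
            for b2 in seconds:
                if black_wins((b1, g, b2)):
                    total += Fraction(1, len(firsts) * len(greens) * len(seconds))
    return total

print(win_probability([]))        # 1/6, no memory: pure random play
print(win_probability(DATABASE))  # 2/3, full memory of past games
```

Note that nothing in the sketch says ‘play the top row’; that behavior falls out of the data base lookup.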

But a human does not learn this way. A human learns a heuristic. In order to win, the heuristic that a human Black must learn is: Play the top row if you can. The computer ends up doing this but the idea is nowhere written in the program.

In the sense used by Daniel Kahneman (Thinking, Fast and Slow, 2011) a heuristic is a rule-of-thumb judgement that we rely upon to cope with pressing affairs. It is a prejudice or stereotype that we use to reach a rapid conclusion. When an immediate response is required we act using a heuristic rather than giving concentrated thought to the matter. Using a heuristic is what Kahneman calls a ‘System One’ response.

In computing, a heuristic has a different meaning: it is an algorithm that constrains possibility space. It is a search method to query a data base more effectively. A way to avoid going through every single one of all previous games – all the possibilities – in the data base so as to locate a winning move. An evident example from common experience is in looking up a word – say the word ‘quit.’ Rather than scanning every word in the dictionary we look only among the words beginning with the letter q. That is a heuristic algorithm. It saves a lot of effort in the data base search.
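The dictionary example can be sketched in Python. The word list is made up for the illustration, and `full_scan`, `build_index`, and `restricted_scan` are hypothetical names; the point is only to compare how many words get examined with and without the first-letter restriction.

```python
from collections import defaultdict

# A tiny made-up 'dictionary' for illustration.
WORDS = ["apple", "quack", "quiet", "quit", "robot", "zebra"]

def full_scan(words, target):
    """Look at every word in turn; return (found, number of words examined)."""
    examined = 0
    for word in words:
        examined += 1
        if word == target:
            return True, examined
    return False, examined

def build_index(words):
    """Group the words by their first letter."""
    index = defaultdict(list)
    for word in words:
        index[word[0]].append(word)
    return index

def restricted_scan(index, target):
    """Scan only the words that share the target's first letter."""
    return full_scan(index.get(target[0], []), target)

index = build_index(WORDS)
print(full_scan(WORDS, "quit"))        # (True, 4)
print(restricted_scan(index, "quit"))  # (True, 3)
```

With six words the saving is trivial, but the restricted scan never looks outside the ‘q’ group however large the rest of the dictionary grows.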

What is the relationship between the two, between a prejudice in decision making and a restricted scan of a data base? The parallel seems suggestive.

Comments

2 responses to “Robots’ Feelings”

Feelings seems like a loaded term to me in this context. I mean, there is feeling in the sense of a “sense”. For example, “I feel like I am close to solving this problem” can be correlated with the idea of a game score. The game score that an application uses to judge whether a current attempt to win is more successful than the last attempt does appear to have something in common with the feeling that one is making progress.

However, how does sadness or anger factor into this idea? How does one look at our range of emotions and see them as heuristic devices?

I FEEL like there is a relationship.

I suppose if a robot was coded to spend a significant amount of time developing one or more heuristics of its own then this robot might experience feelings when its heuristics are proven fallible by a few silly humans. However in most cases a robot is using heuristics developed by a human and that human is experiencing emotions in the background.

It’s sort of a game of religion; are you willing to believe in my heuristic based ecosystem I call church? If not I might have to find new methods for compliance with the religious heuristic ecosystem. Part of the heuristic ecosystem is to be blind to the obvious… it’s time to comply.